Problem Description / Requirement

Your customer is using new software in the company. This software uses its own logic for protocol measures and locks because – presumably – the concept of intelligent locking is not known.

Before each query is called, the name of the object used, along with the time stamp and user name, is entered in the [dbo].[locks] table. As soon as the process is finished, the entry is removed again. The customer notices that after about 1 hour, access to the [dbo].[locks] table becomes so slow that the application shows noticeable failure symptoms.

Through “trial and error” it was determined that a REBUILD INDEX on the table causes the application to behave as usual again. For this reason, the customer has implemented an SQL Agent job in its system that rebuilds all indexes in the [dbo].[locks] table once an hour. Since the table is not very large, the job is executed for the application without any problems.

The vendor has no idea why this behavior occurs.

The typical statement: “You are the only customer experiencing this behavior”

Challenge

The customer contacts you for a root cause analysis of the behavior because the manufacturer has no explanation for it. Your task is:

- to find out why the performance on the table is continuously decreasing although only a few records are stored..

- to work out a solution to maintain consistent performance.

Condition

- You can use indexes of your choice for the table [dbo].[locks].

- You can customize the stored procedure for the stress test (see below) as you wish

- The metadata of the table must NOT be changed!

Technical Information

The challenge creates two objects in your database:

- Table [dbo].[locks]

- Stored procedure: [dbo].[SQLQueryStress_stress_test]

The table [dbo].[locks] contains a maximum of 200 records, which are continuously deleted by the stored procedure and then added again.

This is intended to simulate a workload that represents many users and accesses.

How to start the challenge

When you are connected to the SQL Server instance, run the following commands:

EXECUTE sqlchallenge.dbo.create_challenge 'list'; |

will give you a list of open challenges |

EXECUTE sqlchallenge.dbo.create_challenge 'name_of_challenge'; |

will create the challenge for you |

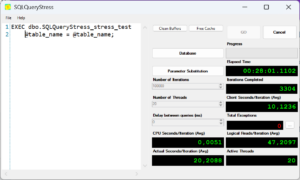

The SQLQueryStress tool is recommended for simulating the workload.

https://github.com/ErikEJ/SqlQueryStress

For this challenge, I have provided a template for SQLQueryStress that already has all settings (except the database name) preset.

Before loading the template, simply replace the name of the database in the JSON file with your own database (generated when the challenge is created). The template can then be loaded.

Note:

When you start SQLQueryStress note the increasing values of

- “Logical Reads”

- “Actual Seconds/Iteration (Avg)”

SQLQueryStress - JSON Template

{

"CollectIoStats":true,

"CollectTimeStats":true,

"CommandTimeout":0,

"ConnectionTimeout":15,

"DelayBetweenQueries":0,

"EnableConnectionPooling":true,

"ForceDataRetrieval":false,

"KillQueriesOnCancel":true,

"MainDbConnectionInfo":

{

"AdditionalParameters":"",

"ApplicationIntent":0,

"AzureMFA":false,

"ConnectTimeout":15,

"Database":"REPLACE THIS STRING WITH THE NAME OF YOUR DATABASE",

"EnablePooling":true,

"EncryptOption":"True",

"IntegratedAuth":false,

"Login":"sqlchallenge",

"MaxPoolSize":2,

"Password":"sqlchallenge#2025!",

"Server":"sqlchallenges.germanywestcentral.cloudapp.azure.com",

"TrustServerCertificate":true

},

"MainQuery":"EXEC dbo.SQLQueryStress_stress_test @table_name = @table_name;",

"NumIterations":100000,

"NumThreads":20,

"ParamDbConnectionInfo":

{

"AdditionalParameters":"",

"ApplicationIntent":0,

"AzureMFA":false,

"ConnectTimeout":0,

"Database":"",

"EnablePooling":true,

"EncryptOption":"False",

"IntegratedAuth":true,

"Login":"",

"MaxPoolSize":0,

"Password":"",

"Server":"(local)",

"TrustServerCertificate":false

},

"ParamMappings":

[

{"Key":"@table_name",

"Value":"table_name"}

],

"ParamQuery":"SELECT table_name FROM dbo.locks;",

"ShareDbSettings":true

}customer expectations

The customer wants to know why the observed behavior occurs. As a result, the SQL Agent job with its hourly execution should be deactivated in order to make the resources available for other tasks.

Solution

This challenge is much more complicated because several components of Microsoft SQL Server interact with each other.

– Read Committed Snapshot Isolation

– NONSargable arguments for the WHERE clause

– Incorrect indexes

If you want to know where the problem lies with this challenge, you can click on the link below.

Connect to Microsoft SQL Server

| SQL Server: | sqlchallenges.germanywestcentral.cloudapp.azure.com |

| Port: | 1433 |

| Version: |

16.0.4165.4

|

| Edition: | Developer Edition |

| Authentication Type: | SQL Server Authentication |

| Login Name: | sqlchallenge |

| Password: | sqlchallenge#2025! |

| Encryption | Mandatory |

| Trust Server Certificate: | True |

During this workshop, you’ll gain the expertise and confidence needed to diagnose and fine-tune slow-performing queries, resulting in improved database responsiveness and end-user satisfaction. Whether you’re an experienced database administrator, developer, or data analyst, this workshop will equip you with the knowledge and practical skills to tackle the most challenging query optimization tasks.

In this workshop, we will cover a wide range of topics and techniques that are essential for modern database developers working with SQL Server. From fundamental concepts to advanced best practices, you’ll gain the knowledge and practical experience needed to excel in your role.

It is very quiet here, at the level 400 😉

Let me start:

As for RCA, I fail miserably (gone are days when I knew all this stuff).

As for the solution, I see very nice results after changing code and replacing index scan (clustered primary key) with index seek (new nonclustered index).

I hope I got the UPPER logic right.

With the new index and code change, I am getting 16 logical reads per iteration consistently and no issues(?) at all.

Without RCA, I can not say it is solved.

Waiting impatiently for an explanation!

ALTER PROCEDURE dbo.SQLQueryStress_stress_test

@table_name NVARCHAR(128)

AS

BEGIN

SET NOCOUNT ON;

SET XACT_ABORT ON;

DECLARE @version_store_mb BIGINT = 0;

BEGIN TRANSACTION;

DELETE dbo.locks

WHERE table_name = UPPER(@table_name)

AND locked_by = SUSER_SNAME();

WAITFOR DELAY ’00:00:00.500′;

INSERT INTO dbo.locks

(table_name, date_locked, application, locked_by)

VALUES

(UPPER(@table_name), GETDATE(), APP_NAME(), SUSER_SNAME());

COMMIT TRANSACTION

END

GO

CREATE NONCLUSTERED INDEX Locks_TableName_LockedBy on dbo.Locks(table_name, locked_by);

GO

Dear Art,

if I could rate it from 0 – 100 I would give you 95 points.

I love your approach to make sure that the table name will ALWAYS be stored in UPPER cases.

It is not necessary in this scenario but better than my approach because my solution will bet on Case Insensitive collations 🙂

One hint: You don’t need the non clustered index anymore because the PRIMARY KEY can be the same definition as your nonclustered index. With this concept you only need to update one single index (table).

I reach 4 IO only per iteration.

BUT – the most important point here is that you covered it as an RCSI problem!

Thank you so much for accepting the challenge.

Best from Germany, Uwe

Thank you Uwe, you are very generous!

The indexes on the table do raise one’s eyebrows.

Looking back, I see that changing PK was allowed (somehow I categorised it under “The metadata of the table must NOT be changed!”).

As for RCSI, I have missed it completely – I copied objects and data to my computer (did not want to run SqlQueryStress from across the pond).

Thank you for the challenge, looking forward to the next one!

Art